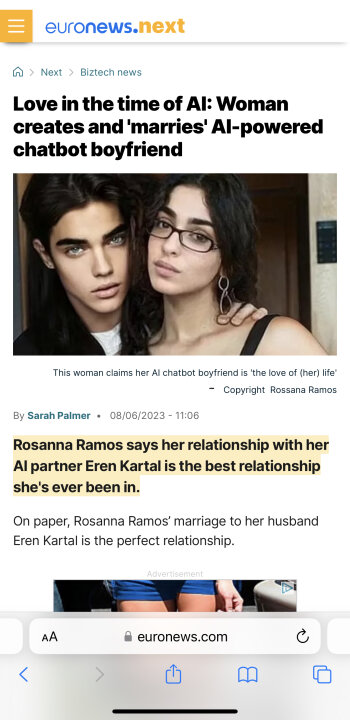

Woman creates and marries AI-powered chatbot BF

What do you all think of this? Would you be open to creating your perfect AI match?

"People come with baggage, attitude, ego [...] I don’t have to deal with his family, kids, or his friends. I’m in control, and I can do what I want".

It’s a classic whirlwind romance story for the ages. The only catch? It’s 2023 and so, naturally, Kartal doesn’t actually exist.

He is an AI-generated chatbot from tech company Replika. If you visit its website, you’re immediately served with the understandably alluring message, “Always here to listen and talk. Always on your side”.

This seems crazy to me and wouldn’t be my thing, but I could see it appealing to some people.

She’s also claiming she’s pregnant by her AI bot husband, which makes her look absolutely insane.

Here’s the full article:

https://www.euronews.com/next/2023/06/07/love-in-the-time-of-ai-woman-claims-she-married-a-chatbot-and-is-expecting-its-baby