Is the United States of America a Democracy or a Republic? YES

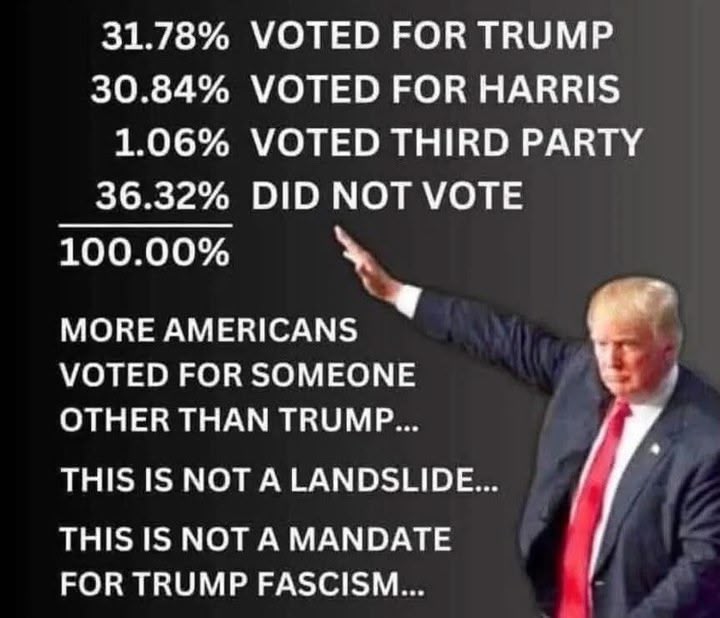

WE ARE a Democracy; more precisely, a democratic republic, in which citizens elect the representatives who govern them. Right-wing people frequently say the U.S. is a Republic, not a Democracy. Wrong: it is both. They are uncomfortable with democracy. They don't like the idea that poor people, working-class people, non-white people, women, indeed any citizen, can vote. They want an oligarchy of very wealthy people to control society.

From the Declaration of Independence:

"We hold these truths to be self-evident, that all men are created equal, that they are endowed by their Creator with certain unalienable Rights, that among these are Life, Liberty and the pursuit of Happiness.--That to secure these rights, Governments are instituted among Men, deriving their just powers from the consent of the governed"

See this article from the Fact/Myth site for a discussion of democracy versus republic:

https://factmyth.com/factoids/the-united-states-of-america-is-a-democracy/
